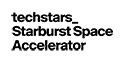

Pattern-based

Neuromorphic Computing

Machine learning that thinks like a brain, learns rapidly, and explains itself completely. Because data scientists need to know why.

Small data-set accuracy

High accuracy with a fraction of the data. Resilient to noise and missing data.

Explainable AI

Provides a detailed interpretation of each prediction for higher confidence and more transparent decisions.

Online learning

Automatically detects anomalies and new classes, and adapts to evolving data.

Learn Faster with Less Data

The Natural Intelligence neuromorphic machine learning system is fundamentally different from current approaches because it is based on patterns, the same way human brains actually work. Our model delivers what no previous solution has succeeded in delivering.

Faster Learning

Speed through training with efficient methods designed to be resilient to imbalanced and imperfect data.

Future Forward

We’re building a world in which AI works the way people think, so that people can work smarter and live better.

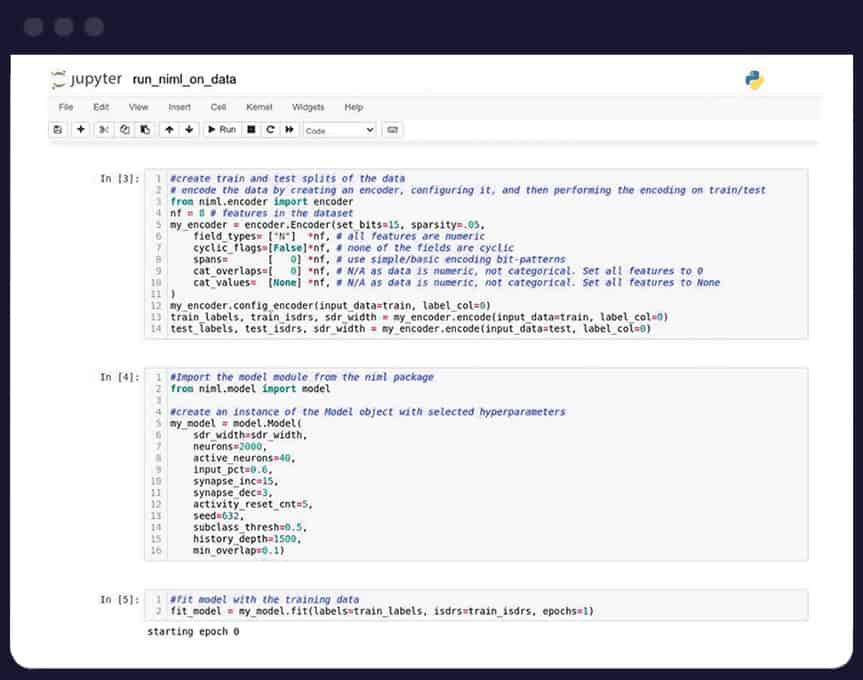

A New Approach to Neuromorphic Machine Learning

Our pattern-based machine learning architecture pushes past the constraints of today’s AI/ML systems by delivering more useful, understandable, and actionable information while reducing the time and cost of collecting and labeling training data. The neuromorphic machine learning system provides:

Unsupervised Learning

Reduces the need to curate and label data to build a machine learning model

Incremental Training

Adds new classes without retraining the entire model

Explainable Results

Delivers rich semantic information about each prediction, from input to output

Anomaly detection

High-resolution decision boundaries clearly identify new signatures

Featured Blog Posts

The future of AI isn’t Artificial. It’s Natural.

Over the past several decades, our species has developed AI with one goal in mind: to mirror the thinking patterns of the human brain.

Pattern-based Machine Learning in Practice

Think of our pattern-based AI as an X-ray of your ML model, allowing you to see the inner workings of its predictions.

Explainable AI: Why it Matters

AI can deliver amazing answers to seemingly insolvable problems, but it’s often a black box. You can’t see the “why” behind the answers.

Request A Demo

Data science can’t wait for incremental change, so we’re here to change AI forever.

We’d love to work with you.